DMLab-30 is a set of environments designed for DeepMind Lab. These environments enable researchers to develop agents for a broad spectrum of tasks, either individually or in a multi-task setting.

- rooms_collect_good_objects_{test,train}
- rooms_exploit_deferred_effects_{test,train}
- rooms_select_nonmatching_object
- rooms_watermaze
- rooms_keys_doors_puzzle
- language_select_described_object
- language_select_located_object
- language_execute_random_task
- language_answer_quantitative_question
- lasertag_one_opponent_small
- lasertag_three_opponents_small
- lasertag_one_opponent_large
- lasertag_three_opponents_large
- natlab_fixed_large_map
- natlab_varying_map_regrowth
- natlab_varying_map_randomized
- skymaze_irreversible_path_hard
- skymaze_irreversible_path_varied
- psychlab_arbitrary_visuomotor_mapping
- psychlab_continuous_recognition
- psychlab_sequential_comparison
- psychlab_visual_search
- explore_object_locations_small
- explore_object_locations_large
- explore_obstructed_goals_small
- explore_obstructed_goals_large
- explore_goal_locations_small
- explore_goal_locations_large
- explore_object_rewards_few
- explore_object_rewards_many
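Names ending in `_{test,train}` use shell-style brace notation for a pair of levels, one for training and one for testing. The helper below is a hypothetical convenience for expanding such entries into concrete level names; it is a sketch, not part of the DeepMind Lab API.

```python
import re

# A brace group such as "{test,train}" expands into one level name per option.
_BRACE = re.compile(r"\{([^{}]*)\}")


def expand_level_names(patterns):
    """Expand brace groups, e.g. 'a_{test,train}' -> ['a_test', 'a_train']."""
    names = []
    for pattern in patterns:
        match = _BRACE.search(pattern)
        if match is None:
            names.append(pattern)
            continue
        prefix, suffix = pattern[:match.start()], pattern[match.end():]
        for option in match.group(1).split(","):
            # Recurse so that any further brace groups in the suffix are expanded too.
            names.extend(expand_level_names([prefix + option + suffix]))
    return names


levels = expand_level_names([
    "rooms_collect_good_objects_{test,train}",
    "rooms_exploit_deferred_effects_{test,train}",
    "rooms_watermaze",
])
print(levels)
```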

The agent must learn to collect good objects and avoid bad objects in two environments. During training, only some combinations of objects and environments are shown, so this correlational structure could lead the agent to assume that the environment matters to the task. It does not, and relying on that assumption is detrimental in a transfer setting. We explicitly verify this by testing transfer performance on held-out object/environment combinations. For more details, see: Higgins, Irina et al. "DARLA: Improving Zero-Shot Transfer in Reinforcement Learning" (2017).

Test Regime: Test set consists of held-out combinations of objects/environments never seen during training.

Observation Spec: RGBD

Level Name: rooms_collect_good_objects_{test,train}
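The held-out test regime can be illustrated with a toy split over object/environment pairs. The object and environment labels below are hypothetical placeholders, not the assets the level actually uses; the point is that every object and every environment still appears during training, while some pairings are only seen at test time.

```python
from itertools import product

# Hypothetical labels for illustration only.
OBJECTS = ["good_A", "good_B", "bad_A", "bad_B"]
ENVIRONMENTS = ["room_1", "room_2"]


def split_combinations(objects, environments, held_out):
    """Return (train, test) lists of (object, environment) pairs.

    Pairs in `held_out` are reserved for testing; all other pairs are for training.
    """
    all_pairs = list(product(objects, environments))
    test = [pair for pair in all_pairs if pair in held_out]
    train = [pair for pair in all_pairs if pair not in held_out]
    return train, test


held_out = {("good_A", "room_2"), ("bad_B", "room_1")}
train, test = split_combinations(OBJECTS, ENVIRONMENTS, held_out)

# Every object and environment appears in training; only the pairings are new at test time.
assert {obj for obj, _ in train} == set(OBJECTS)
assert {env for _, env in train} == set(ENVIRONMENTS)
print(len(train), len(test))  # 6 2
```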

This task requires the agent to make a conceptual leap: picking up a special object, although costly, gives access to more rewards later on, even though this link is never shown within a single environment. The task is expected to be hard for model-free agents to learn, but should be simple for agents using a model-based or predictive strategy.

Test Regime: Tested in a room configuration never seen during training, where picking up a special object suddenly becomes useful.

Observation Spec: RGBD

Level Name: rooms_exploit_deferred_effects_{test,train}

This task requires the agent to choose and collect an object that is different from the one it is shown. The agent is placed into a small room containing an out-of-reach object and a teleport pad. Touching the pad awards the agent 1 point and teleports it to a second room. The second room contains two objects, one of which matches the object in the first room.

- Collect matching object: -10 points.

- Collect non-matching object: +10 points.

Once either object is collected the agent is returned to the first room, with the same initial object being shown.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: rooms_select_nonmatching_object
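The scoring rules above can be written down as a small function. This is a sketch of the stated rules only (+1 for the teleport pad, -10 for the matching object, +10 for the non-matching object); the event names and object names are hypothetical, and this is not the level's own implementation.

```python
from enum import Enum


class Event(Enum):
    TOUCH_PAD = "touch_pad"            # first room: teleports the agent to the second room
    COLLECT_OBJECT = "collect_object"  # second room: returns the agent to the first room


def reward(event, collected=None, shown=None):
    """Score one event in rooms_select_nonmatching_object (illustrative sketch)."""
    if event is Event.TOUCH_PAD:
        return 1
    if event is Event.COLLECT_OBJECT:
        # The agent is rewarded for choosing the object that differs from the one shown.
        return -10 if collected == shown else 10
    raise ValueError(f"unknown event: {event}")


# One trial with hypothetical object names: touch the pad, then pick the non-matching object.
shown = "teapot"
trial = [(Event.TOUCH_PAD, None), (Event.COLLECT_OBJECT, "hat")]
total = sum(reward(event, obj, shown) for event, obj in trial)
print(total)  # 11
```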

The agent must find a hidden platform that generates a reward when found. The platform is difficult to find the first time, but in subsequent trials the agent should remember where it is and go straight back to it. This task tests episodic memory and navigation ability.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: rooms_watermaze

A procedural planning puzzle. The agent must reach the goal object, located in a position that is blocked by a series of coloured doors. Single-use coloured keys open matching doors, and only one key can be held at a time. The objective is to figure out the correct sequence in which the keys must be collected and the rooms traversed. Visiting the rooms or collecting keys in the wrong order can make the goal unreachable.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: rooms_keys_doors_puzzle
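The puzzle can be framed as a search over states of the form (current room, key in hand, keys picked up, doors opened). Below is a minimal breadth-first-search sketch over a hypothetical room graph, assuming that the agent can only pick up a key when its hands are empty and that an opened door stays open. It illustrates the planning problem; it is not how the level is generated or solved internally.

```python
from collections import deque


def solve(start, goal_room, corridors, keys):
    """Breadth-first search over puzzle states.

    corridors: list of (room_a, room_b, door_colour or None).
    keys: dict key_id -> (room, colour).
    Returns the shortest list of actions reaching goal_room, or None if unreachable.
    """
    # State: (room, colour of held key or None, keys picked up, doors opened).
    initial = (start, None, frozenset(), frozenset())
    queue = deque([(initial, [])])
    seen = {initial}
    while queue:
        (room, held, taken, opened), plan = queue.popleft()
        if room == goal_room:
            return plan
        successors = []
        # Assumption: a key can only be picked up when the agent holds no key.
        if held is None:
            for key_id, (key_room, colour) in keys.items():
                if key_room == room and key_id not in taken:
                    successors.append(((room, colour, taken | {key_id}, opened), f"take {key_id}"))
        # Move along a corridor, opening a closed door with a matching key.
        for idx, (a, b, door) in enumerate(corridors):
            if room not in (a, b):
                continue
            other = b if room == a else a
            if door is None or idx in opened:
                successors.append(((other, held, taken, opened), f"go {other}"))
            elif held == door:
                # Keys are single use: the key is consumed and (assumed) the door stays open.
                successors.append(((other, None, taken, opened | {idx}), f"open {door} door, go {other}"))
        for state, action in successors:
            if state not in seen:
                seen.add(state)
                queue.append((state, plan + [action]))
    return None


corridors = [
    ("start", "a", "red"),
    ("start", "b", "red"),   # a trap: spending the red key here strands the agent
    ("a", "goal", "blue"),
]
keys = {"red_key": ("start", "red"), "blue_key": ("a", "blue")}
plan = solve("start", "goal", corridors, keys)
print(plan)
```

Because the search tracks which keys have been spent, it avoids the trap door to room `b`, which would consume the only red key and make the goal unreachable.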

For details on the addition of language instructions, see: Hermann, Karl Moritz, & Hill, Felix et al. "Grounded Language Learning in a Simulated 3D World" (2017).

The agent is placed into a small room containing two objects. An instruction is used to describe one of the objects. The agent must successfully follow the instruction and collect the goal object.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD and language

Level Name: language_select_described_object

The agent is asked to collect a specified coloured object in a specified coloured room. Example instruction: “Pick the red object in the blue room.” There are four variants of the task, each of which has an equal chance of being selected. The variants differ in their number of rooms (between 2 and 6); variants with more rooms have more distractors, making the task more challenging.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD and language

Level Name: language_select_located_object

The agent is given one of seven possible tasks, each with a different type of language instruction. Example instruction: “Get the red hat from the blue room.” The agent is rewarded for collecting the correct object, and penalised for collecting the wrong object. When any object is collected, the level restarts and a new task is selected.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD and language

Level Name: language_execute_random_task

The agent is given a yes-or-no question about object colours and counts, and answers by selecting one of two objects:

- White sphere = yes

- Black sphere = no

Example questions:

- “Are all cars blue?”

- “Is any car blue?”

- “Is anything blue?”

- “Are most cars blue?”

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD and language

Level Name: language_answer_quantitative_question
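The example questions reduce to three quantifiers ("all", "any", "most") evaluated over the objects in the scene. The sketch below uses a hypothetical list-of-objects scene representation and assumes that "most" means strictly more than half; it shows the logic of the questions, not how the level generates or checks them.

```python
from typing import List, NamedTuple, Optional


class Obj(NamedTuple):
    kind: str
    colour: str


def answer(quantifier: str, kind: Optional[str], colour: str, scene: List[Obj]) -> bool:
    """Evaluate e.g. 'Are most cars blue?' over a scene; kind=None means 'anything'."""
    # Objects the question ranges over: all objects of `kind`, or every object.
    pool = [o for o in scene if kind is None or o.kind == kind]
    matching = sum(o.colour == colour for o in pool)
    if quantifier == "all":
        # Assumption: 'all' over an empty pool is answered 'no'.
        return bool(pool) and matching == len(pool)
    if quantifier == "any":
        return matching > 0
    if quantifier == "most":
        # Assumption: 'most' means strictly more than half.
        return 2 * matching > len(pool)
    raise ValueError(f"unsupported quantifier: {quantifier!r}")


def sphere(yes: bool) -> str:
    """Which sphere the agent should select to give this answer."""
    return "white" if yes else "black"


scene = [Obj("car", "blue"), Obj("car", "blue"), Obj("car", "red"), Obj("hat", "blue")]
questions = {
    "Are all cars blue?": ("all", "car"),
    "Is any car blue?": ("any", "car"),
    "Is anything blue?": ("any", None),
    "Are most cars blue?": ("most", "car"),
}
for text, (quantifier, kind) in questions.items():
    print(text, sphere(answer(quantifier, kind, "blue", scene)))
```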

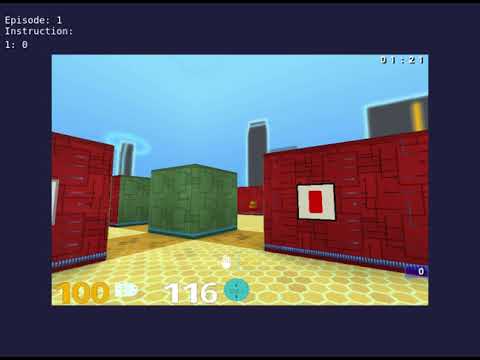

This task requires the agent to play laser tag in a procedurally generated map containing random gadgets and power-ups. The map is small and there is 1 opponent bot of difficulty level 4. The agent begins the episode with the default Rapid Gadget and a limit of 100 tags. The agent’s Shield starts at 125 and slowly decays to its maximum of 100. The gadgets, power-ups and map layout are randomised per episode, so the agent must adapt to each new environment.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: lasertag_one_opponent_small

This task requires the agent to play laser tag in a procedurally generated map containing random gadgets and power-ups. The map is small and there are 3 opponent bots of difficulty level 4. The agent begins the episode with the default Rapid Gadget and a limit of 100 tags. The agent’s Shield starts at 125 and slowly decays to its maximum of 100. The gadgets, power-ups and map layout are randomised per episode, so the agent must adapt to each new environment.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: lasertag_three_opponents_small

This task requires the agent to play laser tag in a procedurally generated map containing random gadgets and power-ups. The map is large and there is 1 opponent bot of difficulty level 4. The agent begins the episode with the default Rapid Gadget and a limit of 100 tags. The agent’s Shield starts at 125 and slowly decays to its maximum of 100. The gadgets, power-ups and map layout are randomised per episode, so the agent must adapt to each new environment.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: lasertag_one_opponent_large

This task requires the agent to play laser tag in a procedurally generated map containing random gadgets and power-ups. The map is large and there are 3 opponent bots of difficulty level 4. The agent begins the episode with the default Rapid Gadget and a limit of 100 tags. The agent’s Shield starts at 125 and slowly decays to its maximum of 100. The gadgets, power-ups and map layout are randomised per episode, so the agent must adapt to each new environment.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: lasertag_three_opponents_large

This is a long-term memory variation of a mushroom foraging task. The agent must collect mushrooms within a naturalistic terrain environment to maximise its score. The mushrooms do not regrow. The map is a fixed, large environment. The time of day is randomised (day, dawn, night). In each episode, the spawn location is picked randomly from a set of potential spawn locations.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: natlab_fixed_large_map

This is a short-term memory variation of a mushroom foraging task. The agent must collect mushrooms within a naturalistic terrain environment to maximise its score. Throughout the episode, mushrooms regrow in the same location around one minute after being collected. The map is a randomised, small environment. The topography and the number, position, orientation and size of shrubs, cacti and rocks are all randomised. The time of day is randomised (day, dawn, night). The spawn location is randomised for each episode.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: natlab_varying_map_regrowth
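Because mushrooms regrow in the same place, remembering where they were pays off. A minimal sketch of that regrowth rule, assuming a fixed 60-second period (the description only says "around one minute") and hypothetical 2-D mushroom locations:

```python
REGROWTH_SECONDS = 60.0  # assumed; the level description says "around one minute"


class MushroomPatch:
    """Track which fixed mushroom locations currently hold a mushroom."""

    def __init__(self, locations, regrowth=REGROWTH_SECONDS):
        self.regrowth = regrowth
        # None means the mushroom has never been collected this episode.
        self.collected_at = {loc: None for loc in locations}

    def available(self, loc, now):
        """A mushroom is present if never collected, or collected long enough ago."""
        t = self.collected_at[loc]
        return t is None or now - t >= self.regrowth

    def collect(self, loc, now):
        """Collect the mushroom at `loc` if present; return True on success."""
        if not self.available(loc, now):
            return False
        self.collected_at[loc] = now
        return True


patch = MushroomPatch([(3, 4), (10, 2)])
first = patch.collect((3, 4), now=5.0)    # True: mushroom present
early = patch.collect((3, 4), now=30.0)   # False: not regrown yet
later = patch.collect((3, 4), now=65.0)   # True: regrown in the same place
```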

This is a randomised variation of a mushroom foraging task. The agent must collect mushrooms within a naturalistic terrain environment to maximise its score. The mushrooms do not regrow. The map is randomly generated and of intermediate size. The topography and the number, position, orientation and size of shrubs, cacti and rocks are all randomised, as are the mushroom locations. The time of day is randomised (day, dawn, night). The spawn location is randomised for each episode.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: natlab_varying_map_randomized

This task requires agents to reach a goal located at a distance from their starting position. The start and goal are connected by a sequence of platforms placed at different heights. Jumping is disabled, so higher platforms are unreachable: once the agent drops to a lower platform, it cannot backtrack to a higher one. The agent must therefore plan its route to avoid becoming stuck and failing the task.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: skymaze_irreversible_path_hard
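With jumping disabled, movement is one-way downhill, so route planning amounts to a search on a directed graph where a step is allowed only onto a platform that is not higher. A hedged sketch over a hypothetical platform graph (names, heights and adjacency are made up, and real platform connectivity in the level may differ):

```python
from collections import deque


def plan_route(heights, edges, start, goal):
    """BFS over platforms, allowing a step only onto a platform that is not higher.

    heights: dict platform -> height; edges: iterable of (a, b) adjacent pairs.
    Returns the list of platforms from start to goal, or None if no route exists.
    """
    neighbours = {p: [] for p in heights}
    for a, b in edges:
        neighbours[a].append(b)
        neighbours[b].append(a)
    parent = {start: None}
    queue = deque([start])
    while queue:
        p = queue.popleft()
        if p == goal:
            route = []
            while p is not None:
                route.append(p)
                p = parent[p]
            return route[::-1]
        for q in neighbours[p]:
            # Without jumping, the agent can only stay level or drop down.
            if q not in parent and heights[q] <= heights[p]:
                parent[q] = p
                queue.append(q)
    return None


heights = {"start": 5, "ledge": 4, "pit": 0, "step": 3, "goal": 2}
edges = [("start", "pit"), ("start", "ledge"), ("ledge", "step"), ("step", "goal"), ("pit", "step")]
route = plan_route(heights, edges, "start", "goal")
print(route)
```

Dropping into `pit` first would strand the agent: from there every neighbour is higher, so the goal can no longer be reached.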

A variation of the Irreversible Path Hard task. This version selects a map layout of random difficulty for the agent to solve. The jump action is disabled (it acts as a NOOP) in this task.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: skymaze_irreversible_path_varied

For details, see: Leibo, Joel Z. et al. "Psychlab: A Psychology Laboratory for Deep Reinforcement Learning Agents" (2018).

In this task, the agent is shown a sequence of images and must learn and remember the association between each image and a specific movement pattern (a location to point at). The agent is rewarded if it can recall the action associated with a given image. The images are drawn from a set of ~2500, and the associations are randomly generated and differ in each episode.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: psychlab_arbitrary_visuomotor_mapping

This task tests familiarity memory. Images are shown one after another, and the agent must indicate whether or not it has already seen each image during that episode. Looking at the left square indicates no, and looking at the right square indicates yes. The images (drawn from a set of ~2500) are shown in a different random order in every episode.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: psychlab_continuous_recognition
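The correct response on each trial depends only on whether the image has already appeared in the current episode. A sketch of that ground-truth labelling, using hypothetical image IDs; how the level actually schedules repeats is not specified here, so the example sequence is made up.

```python
def familiarity_labels(sequence):
    """Return the correct response for each image shown in one episode.

    'yes' (look at the right square) if the image already appeared earlier in
    this episode, otherwise 'no' (look at the left square).
    """
    seen = set()  # episodic memory; starts empty at the beginning of each episode
    labels = []
    for image in sequence:
        labels.append("yes" if image in seen else "no")
        seen.add(image)
    return labels


# Hypothetical image IDs standing in for images from the ~2500-image pool.
episode = ["img_17", "img_402", "img_17", "img_9", "img_402"]
labels = familiarity_labels(episode)
print(labels)  # ['no', 'no', 'yes', 'no', 'yes']
```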

Two consecutive patterns are shown to the agent. The agent must indicate whether or not the two patterns are identical. The delay time between the study pattern and the test pattern is variable.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: psychlab_sequential_comparison

A collection of shapes is shown to the agent. The agent must identify whether or not a specific shape is present in the collection. In each trial, the agent searches for a pink ‘T’ shape. Two black squares at the bottom of the screen are used for ‘yes’ and ‘no’ responses.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: psychlab_visual_search

This task requires agents to collect apples, which are placed in rooms within the maze. The agent must collect as many apples as possible before the episode ends to maximise its score. Once all of the apples have been collected, the level resets, and this repeats until the episode ends. Apple locations, level layout and theme are randomised per episode. Agent spawn location is randomised per reset.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: explore_object_locations_small
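The explore levels distinguish between what is randomised once per episode and what is re-sampled on every reset. A toy Python model of those rules for this level, assuming a map of numbered cells and a reward of 1 per apple (both hypothetical); it is not the level's actual code.

```python
import random


class ExploreLevel:
    """Toy model of the per-episode vs per-reset rules (illustrative only)."""

    def __init__(self, seed, num_cells=20, num_apples=4):
        self.rng = random.Random(seed)
        self.num_cells = num_cells
        # Fixed for the whole episode: apple locations (layout and theme would be too).
        self.apple_cells = frozenset(self.rng.sample(range(num_cells), num_apples))
        self.reset()

    def reset(self):
        # Re-sampled on every reset: the agent's spawn location. All apples return.
        self.spawn = self.rng.randrange(self.num_cells)
        self.remaining = set(self.apple_cells)

    def visit(self, cell):
        """Collect an apple at `cell`, if any; reset once all apples are collected."""
        if cell not in self.remaining:
            return 0
        self.remaining.discard(cell)
        if not self.remaining:
            self.reset()
        return 1  # assumed reward per apple


level = ExploreLevel(seed=0)
score = sum(level.visit(cell) for cell in sorted(level.apple_cells))
```

After the last apple is collected the level resets: the apples reappear at the same episode-level locations, while only the spawn point is re-drawn.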

This task is the same as Object Locations Small, but with a larger map and longer episode duration. Apple locations, level layout and theme are randomised per episode. Agent spawn location is randomised per reset.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: explore_object_locations_large

This task is similar to Goal Locations Small: agents are required to find the goal as fast as possible, but now with randomly opened and closed doors. After the goal is found, the level restarts. Goal location, level layout and theme are randomised per episode. Agent spawn location is randomised per reset. Door states (open/closed) are randomly selected per reset, but a path to the goal always exists.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: explore_obstructed_goals_small
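One way to guarantee that a path to the goal always exists is rejection sampling: draw random door states and redraw until the goal is reachable from the spawn. The following is a hedged sketch of that idea over a hypothetical room graph; the description does not say how the level actually enforces the guarantee.

```python
import random
from collections import deque


def reachable(start, goal, corridors, open_doors):
    """BFS over rooms; a corridor with a door is passable only if that door is open."""
    graph = {}
    for i, (a, b, has_door) in enumerate(corridors):
        if has_door and i not in open_doors:
            continue
        graph.setdefault(a, []).append(b)
        graph.setdefault(b, []).append(a)
    seen, queue = {start}, deque([start])
    while queue:
        room = queue.popleft()
        if room == goal:
            return True
        for nxt in graph.get(room, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


def sample_doors(start, goal, corridors, rng, p_open=0.5, max_tries=1000):
    """Redraw random door states until the goal is reachable from the spawn."""
    doors = [i for i, (_, _, has_door) in enumerate(corridors) if has_door]
    for _ in range(max_tries):
        open_doors = {i for i in doors if rng.random() < p_open}
        if reachable(start, goal, corridors, open_doors):
            return open_doors
    raise RuntimeError("no solvable door configuration found")


# (room_a, room_b, has_door); hypothetical layout with two alternative routes.
corridors = [
    ("spawn", "hall", True),
    ("hall", "goal", True),
    ("spawn", "side", False),
    ("side", "goal", True),
]
open_doors = sample_doors("spawn", "goal", corridors, random.Random(0))
print(sorted(open_doors))
```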

This task is the same as Obstructed Goals Small, but with a larger map and longer episode duration. Goal location, level layout and theme are randomised per episode. Agent spawn location is randomised per reset. Door states (open/closed) are randomly selected per reset, but a path to the goal always exists.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: explore_obstructed_goals_large

This task requires agents to find the goal object as fast as possible. After the goal object is found, the level restarts. Goal location, level layout and theme are randomised per episode. Agent spawn location is randomised per reset.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: explore_goal_locations_small

This task is the same as Goal Locations Small, but with a larger map and longer episode duration. Goal location, level layout and theme are randomised per episode. Agent spawn location is randomised per reset.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: explore_goal_locations_large

This task requires agents to collect human-recognisable objects placed around a room. Some objects belong to a positively rewarded category and some to a negatively rewarded one. After all positive-category objects are collected, the level restarts. Level theme, object categories and object reward per category are randomised per episode. Agent spawn location, object locations and number of objects per category are randomised per reset.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: explore_object_rewards_few
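The reward structure splits into per-episode choices (which categories are rewarding) and per-reset choices (how many objects of each category appear). A sketch of that split, assuming one positive category, hypothetical reward values of +1 and -1, and made-up category names; none of these values come from the level itself.

```python
import random


def sample_episode(rng, categories, num_positive=1):
    """Per episode: assign each category a reward (hypothetical +1 / -1 values)."""
    positive = set(rng.sample(categories, num_positive))
    return {c: (1 if c in positive else -1) for c in categories}


def sample_reset(rng, category_reward, max_per_category=3):
    """Per reset: choose how many objects of each category to place."""
    return {c: rng.randint(1, max_per_category) for c in category_reward}


def collect(counts, category_reward, category):
    """Collect one object; return (reward, restart), where restart means every
    positive-category object has now been collected."""
    counts[category] -= 1
    restart = all(n == 0 for c, n in counts.items() if category_reward[c] > 0)
    return category_reward[category], restart


rng = random.Random(0)
rewards = sample_episode(rng, ["hat", "car", "cake", "ball"])  # hypothetical categories
counts = sample_reset(rng, rewards)
best_possible = sum(n for c, n in counts.items() if rewards[c] > 0)

total, restart = 0, False
for category, value in rewards.items():
    # A good policy collects only positive objects and avoids negative ones.
    while value > 0 and counts[category] > 0:
        r, restart = collect(counts, rewards, category)
        total += r
```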

This task is a more difficult variant of Object Rewards Few, with an increased number of goal objects and longer episode duration. Level theme, object categories and object reward per category are randomised per episode. Agent spawn location, object locations and number of objects per category are randomised per reset.

Test Regime: Training and testing levels drawn from the same distribution.

Observation Spec: RGBD

Level Name: explore_object_rewards_many