trtmodel(max batch size =2) inference time spent about 2 times than trtmodel(max batch size =1) on convolution and activation layer #1046

Comments

It is expected that BS=2 takes almost 2x the runtime of BS=1, because you now have 2x the math operations while the math throughput stays the same.

Could you elaborate on why you expect them to run in parallel? A GPU has limited throughput for tensor operations, and even at BS=1 it may already be using all of the math throughput.

@nvpohanh Thank you for the quick response. The TensorRT best-practices guide (https://docs.nvidia.com/deeplearning/tensorrt/best-practices/index.html), in section 2.2 "Batching", says "The most important optimization is to compute as many results in parallel as possible using batching" and "Each layer of the network will have some amount of overhead and synchronization required to compute forward inference. By computing more results in parallel, this overhead is paid off more efficiently. In addition, many layers are performance-limited by the smallest dimension in the input. If the batch size is one or small, this size can often be the performance limiting dimension. For example, the FullyConnected layer with V inputs and K outputs can be implemented for one batch instance as a matrix multiply of an 1xV matrix with a VxK weight matrix. If N instances are batched, this becomes an NxV multiplied by VxK matrix. The vector-matrix multiply becomes a matrix-matrix multiply, which is much more efficient". Based on this, I expected the convolution layers to process the batch in parallel and become more efficient, but batching has no such effect on the convolution layers in our detection model. We are confused: where does batching improve efficiency? Can you give me a hint? Thank you.

Generally, GPU computation is more efficient when the batch size is larger. This is because when you have a lot of ops, you can fully utilize the GPU and hide some of the inefficiency or overhead between ops. However, if there are already a lot of ops at BS=1 and BS=1 alone fully utilizes the GPU, you may not see any further increase in efficiency. For example, is your input size BSx3x1600x1000? That is a very large image, which is expected to fully utilize even the largest GPU we have (like A100), so I don't think increasing BS improves GPU efficiency. In terms of N/V/K, in your case "N" is already 1600x1000 at BS=1, so N=1600x1000 vs N=2x1600x1000 does not make much difference in terms of GPU efficiency, compared to N=1 vs N=2.
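To make the N=1 vs N=2 argument concrete, here is a rough sketch of the arithmetic intensity (FLOPs per byte moved) of an NxV by VxK multiply. It assumes an idealised traffic model in which each matrix is read or written exactly once, and fp32 elements; the function names and any V/K values you pass in are illustrative, not taken from the model in this issue.

```python
def gemm_arithmetic_intensity(n, v, k, bytes_per_element=4):
    """FLOPs per byte for an (n x v) @ (v x k) multiply.

    Assumes each matrix is read or written exactly once (idealised),
    and fp32 storage by default. Both are simplifying assumptions.
    """
    flops = 2 * n * v * k  # one multiply and one add per term
    bytes_moved = bytes_per_element * (n * v + v * k + n * k)
    return flops / bytes_moved


def intensity_gain(n, v, k, factor=2):
    """Ratio of arithmetic intensity after growing n by `factor`."""
    before = gemm_arithmetic_intensity(n, v, k)
    after = gemm_arithmetic_intensity(factor * n, v, k)
    return after / before
```

Under this model, going from N=1 to N=2 nearly doubles the work done per byte of weights loaded, whereas going from N=1600x1000 to N=2x1600x1000 leaves it almost unchanged, because the weight matrix is already amortised over a very large N.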

@nvpohanh Thank you for your response. How can I confirm whether the GPU is fully utilized? For example, can I use the "nvidia-smi" command, or some other command or tool? Thanks in advance!

Yes! You can run

Hi @nvpohanh, in our test environment, the GPU monitoring output during inference is as below:

SM utilization is the GPU utilization. Ideally, you want to see 100 across the board for SM utilization. Could you profile it using Nsight Systems to see why the GPU is sometimes idle, resulting in low SM utilization? Maybe there are gaps between batches?
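The exact monitoring command used in this thread was not preserved. As one possible way to sample utilization while inference runs, the sketch below polls `nvidia-smi --query-gpu=utilization.gpu`, which reports the percentage of time during which at least one kernel was executing. The helper names, sample count, and interval are assumptions for illustration.

```python
import subprocess
import time


def parse_utilization(text):
    """Parse 'csv,noheader,nounits' output, e.g. '87\n', into an int."""
    return int(text.strip().splitlines()[0])


def read_gpu_utilization(gpu_index=0):
    """Query nvidia-smi once for the utilization (percent) of one GPU."""
    out = subprocess.check_output(
        ["nvidia-smi", "--id={}".format(gpu_index),
         "--query-gpu=utilization.gpu",
         "--format=csv,noheader,nounits"],
        universal_newlines=True,
    )
    return parse_utilization(out)


def sample_utilization(num_samples=20, interval_s=0.5,
                       read=read_gpu_utilization):
    """Collect several readings; `read` is injectable for testing."""
    samples = []
    for _ in range(num_samples):
        samples.append(read())
        time.sleep(interval_s)
    return samples


def summarize(samples):
    """Return min, max, and mean of the collected readings."""
    return {
        "min": min(samples),
        "max": max(samples),
        "mean": sum(samples) / len(samples),
    }
```

Running `summarize(sample_utilization())` in a second process while the inference loop is active gives a coarse picture; a mean well below 100 suggests idle gaps, which a timeline profiler such as Nsight Systems can then locate.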

@nvpohanh Thanks for your response!

I have downloaded the Nsight Systems CLI and used the command below to profile our application with batch size 1. I have the two questions below; can you give me some suggestions?

The screenshot I uploaded shows only part of the information; if needed, I can upload the qdrep file. Thanks in advance!

@nvpohanh Can you help me solve the problem above? I have little experience with this, so I hope you can give me some suggestions. Thanks in advance.

The part you showed is just the setup stage. The actual inference is at the end. Maybe you can send me the qdrep file so that I can take a quick look.

@nvpohanh Thank you very much.

It seems that you only run one batch and time it. Is that correct? To correctly measure the latency of a batch, it is recommended that:

Thanks

@nvpohanh Thank you for your response. I could not follow your suggestion in step two; can you point me to the relevant source code so that I can quickly confirm it? I am sorry to trouble you so much, but you help us so

@nvpohanh Can you help me resolve the question above? Thank you in advance.

Please refer to our trtexec as the example source code. The profile looks fine to me. I don't think there is any issue in TRT. There seems to be some Python code overhead between batches. You may want to optimize that if you want to fully utilize the GPU. Could you let me know if you have more questions? Thanks

For example, you can remove the CUDA stream synchronizations between batches and only synchronize at the end.

Or replace cudaMemcpy() with cudaMemcpyAsync().
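The idea of timing many batches and synchronizing only once at the end can be sketched as a small harness. This is a generic illustration, not the code from trtexec: `run_batch` and `synchronize` are hypothetical callables you would supply (for instance, a function that enqueues one inference on a CUDA stream, and that stream's synchronize method), and the warm-up and iteration counts are arbitrary defaults.

```python
import time


def benchmark(run_batch, synchronize=lambda: None,
              warmup=10, iterations=100):
    """Return the average time per batch in milliseconds.

    run_batch   -- hypothetical callable that enqueues one batch
    synchronize -- hypothetical callable that blocks until all
                   enqueued GPU work has finished
    """
    # Warm-up runs are excluded from the measurement.
    for _ in range(warmup):
        run_batch()
    synchronize()  # make sure warm-up work is done before timing

    start = time.perf_counter()
    for _ in range(iterations):
        run_batch()  # no per-batch synchronization here
    synchronize()  # single synchronization at the end
    elapsed_s = time.perf_counter() - start

    return elapsed_s / iterations * 1000.0
```

Because the host does not wait after every batch, it can enqueue the next batch while the GPU is still busy, which is what closes the gaps seen in the profile. This only helps if the copies inside `run_batch` are asynchronous as well.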

Hi @nvpohanh

@nvpohanh If you have time, can you help me resolve the question above?

Hi @githublsk, here are some examples:

@ttyio Is there anything else you think we can help with? This is not a TRT-specific issue.

+1 for @nvpohanh's suggestion to use Triton Inference Server, thanks!

Closing since there has been no activity for more than 3 weeks. Please reopen if you still have questions, thanks!

Environment:

TensorRT Version: 7.2.1

CUDA Version: 11.1

cuDNN Version: 8.0.4

Operating System + Version: Ubuntu 18.04

Python Version: 3.6.10

PyTorch Version: 1.7.0

Description:

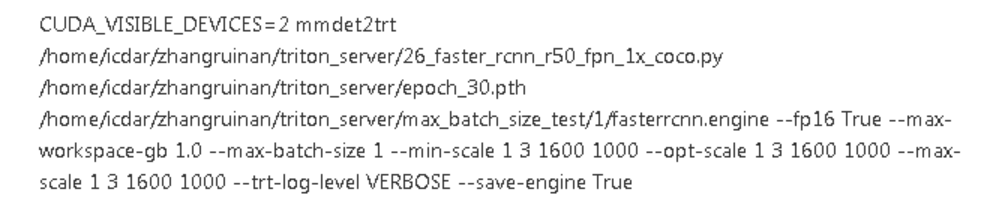

First, we used the mmdetection-to-tensorrt project (https://github.com/grimoire/mmdetection-to-tensorrt) to convert our Faster R-CNN model (.pth file) directly to a TensorRT model with max batch size = 1. The convert command is as follows:

Then, we used the same command but set max batch size = 2. The convert command is as follows:

Third, we ran inference with both converted models on the same image; for the model with max batch size = 2, the image was repeated twice. We added the code below to record per-layer time and summarized the 20 most time-consuming layers of the two models in the following table. For the layers marked in green, the time for the model with max batch size = 2 is almost double that of the model with max batch size = 1. That seems unreasonable, because the layers marked in green are convolution layers, whose tensor operations we expected to run in parallel across the batch. Can you give me some suggestions? Thank you.
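The original profiling code and table were not preserved. As a sketch of the comparison described above, the helper below takes two per-layer timing dictionaries (layer name to milliseconds, however they were collected) and lists the slowest layers at batch size 2 together with the BS=2 / BS=1 ratio. The function name and the dictionary format are assumptions; layer names may also differ between two separately built engines, in which case unmatched layers are skipped.

```python
def compare_layer_times(times_bs1, times_bs2, top_k=20):
    """Return up to top_k rows of (layer, ms_bs1, ms_bs2, ratio).

    Rows are sorted by the BS=2 time, slowest first. Layers missing
    from the BS=1 profile, or with a non-positive BS=1 time, are skipped.
    """
    rows = []
    for name, ms_bs2 in times_bs2.items():
        ms_bs1 = times_bs1.get(name)
        if ms_bs1 is None or ms_bs1 <= 0:
            continue
        rows.append((name, ms_bs1, ms_bs2, ms_bs2 / ms_bs1))
    rows.sort(key=lambda row: row[2], reverse=True)
    return rows[:top_k]
```

A ratio close to 2 means the layer's per-image cost is unchanged by batching, while a ratio close to 1 means the second image came almost for free.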
